Math 50

This schedule is subject to change throughout the course at my discretion. Readings will be finalized the Friday before they are covered in class. The textbook references are:

- ER: Evans, Michael J., and Jeffrey S. Rosenthal. Probability and statistics: The science of uncertainty. Macmillan, 2004.

- ISLP: James, Gareth, et al. An introduction to statistical learning: With applications in Python. Springer Nature, 2023.

- ROS: Gelman, Andrew, Jennifer Hill, and Aki Vehtari. Regression and other stories. Cambridge University Press, 2021.

Supplementary material: Material in italics is meant to reinforce your understanding of the core course material, but you will not need to know the specific definitions/theorems/examples for the exams.

Regarding my notes: My course notes are meant as a supplement to the textbook readings and other resources. They are not a self-contained reference for the course material and will inevitably contain some mistakes. I recommend using the notes to get an idea of what concepts/examples are emphasized and then reviewing this material in the textbook.

Please see Canvas for section-specific updates and assignment due dates. This schedule will be updated throughout the term. While I will attempt to stay a few weeks ahead in terms of planning the readings, readings will be finalized roughly one week before the material is covered.

Week 1: Discrete Probability and Monte Carlo Simulations

Topics:

- Familiarity with basic concepts in probability (events, probability distribution) (Monday)

- Independence and conditioning (Wednesday)

- Computation: Basics of Python programming (arrays, DataFrames (moved to Week 3), plotting), the concept of Monte Carlo simulation (Friday)

Class material

- Class notes: Monday (9/16), Wednesday (9/18)

- Colab notebook (9/20)

Reading:

- ER:

- 1.1 (Intro)

- 1.2 (Probability models)

- 1.3 (Properties of probability models)

- 2.1 (Random variables): Definition 2.1.1

- 1.5/2.8 (Conditional probability): Definition 1.5.1, Theorem 1.5.1, Theorem 1.5.2, Definitions 1.5.2 and 1.5.3

- ISLP:

- 2.3 (Python tutorial): I use `np.random` instead of `np.random.default_rng`.

- Review the course policies and this schedule
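As a rough illustration of the Monte Carlo idea and of the two NumPy random interfaces named in the reading note, the sketch below estimates the probability that two independent fair dice sum to 7 (exact value 1/6), once with the legacy `np.random` functions and once with a `np.random.default_rng` generator. The function name and the dice example are hypothetical, chosen only for illustration.

```python
import numpy as np

def estimate_p_sum_seven(n_trials=100_000, seed=0):
    """Monte Carlo estimate of P(die1 + die2 == 7) for two fair, independent dice."""
    # Legacy interface: seed the global generator, then call np.random.* functions
    np.random.seed(seed)
    d1 = np.random.randint(1, 7, size=n_trials)  # upper bound 7 is exclusive
    d2 = np.random.randint(1, 7, size=n_trials)
    legacy_estimate = np.mean(d1 + d2 == 7)      # fraction of trials where the event occurs

    # Generator interface, as used in the ISLP tutorial
    rng = np.random.default_rng(seed)
    g1 = rng.integers(1, 7, size=n_trials)       # upper bound 7 is exclusive
    g2 = rng.integers(1, 7, size=n_trials)
    generator_estimate = np.mean(g1 + g2 == 7)

    return legacy_estimate, generator_estimate

legacy, generator = estimate_p_sum_seven()
print(legacy, generator, 1 / 6)
```

The two interfaces draw from different random streams, so even with the same seed the two estimates will not agree digit for digit; both should be close to 1/6.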

Week 2: iid Sums, Binomial and CLT

Topics:

- Expectations and variances, conditional expectation (Monday)

- Binomial distribution, LLN (Monday)

- Computation: Monte Carlo simulation, histogram, numerical illustration of CLT (Wednesday)

- Continuous probability distributions and probability densities, Central Limit Theorem and Normal distribution (Friday)

Class material

- Class notes:

- Code:

Reading:

- ER:

- 3.1 and 3.2 (Expectations)

- 3.5 (conditional expectation)

- 3.3 (Variance and covariance)

- 2.3 (Discrete distributions)

- 2.4 (Continuous)

- 4.2.1/4.4.1 (Law of large numbers/Central Limit Theorem): You will not need to know the more technical definitions in the textbook, only the intuition behind these results. The CLT video referenced below is extremely helpful for this.
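A minimal numerical illustration of the CLT, assuming a Binomial(n = 1000, p = 0.3) example chosen only for illustration: a binomial count is a sum of iid Bernoulli variables, so after subtracting its mean $np$ and dividing by its standard deviation $\sqrt{np(1-p)}$ it should behave roughly like a standard Normal. The function name is hypothetical.

```python
import numpy as np

def standardized_binomial_draws(n=1000, p=0.3, reps=10_000, seed=0):
    """Draw X ~ Binomial(n, p) reps times and return (X - np) / sqrt(np(1 - p))."""
    np.random.seed(seed)
    x = np.random.binomial(n, p, size=reps)
    mean = n * p                       # E[X] for a binomial
    sd = np.sqrt(n * p * (1 - p))      # SD[X] for a binomial
    return (x - mean) / sd

z = standardized_binomial_draws()
print(z.mean(), z.std())               # should be close to 0 and 1
frac_within = np.mean(np.abs(z) <= 1.96)
print(frac_within)                     # should be close to 0.95 if z is roughly N(0, 1)
```

To see the bell shape directly, the array `z` can be passed to `matplotlib.pyplot.hist` with `density=True`.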

Assignments due:

Week 3: Working with Normal RVs, Least squares LR

Topics:

- Properties of Normal random variables (Monday)

- Single-predictor regression as conditional model (Monday)

- Correlation coefficients, R-squared, regression to the mean (Wednesday)

- Least squares (Wednesday)

- Computation: Simulating regression models and working with tabular data (Dataframes) (Friday)
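One way to simulate a single-predictor regression model and fit it by least squares, sketched under assumed "true" values $a = 1$, $b = 2$ and Normal noise (all hypothetical). It computes the usual estimates $\hat b = \sum (x_i - \bar x)(y_i - \bar y) / \sum (x_i - \bar x)^2$ and $\hat a = \bar y - \hat b \bar x$, and checks that R-squared equals the squared correlation coefficient.

```python
import numpy as np

def simulate_and_fit(a=1.0, b=2.0, sigma=0.5, n=500, seed=0):
    """Simulate y = a + b*x + noise and return least squares estimates plus R^2."""
    np.random.seed(seed)
    x = np.random.normal(0, 1, size=n)
    y = a + b * x + np.random.normal(0, sigma, size=n)

    # Least squares slope and intercept
    x_dev = x - x.mean()
    b_hat = np.sum(x_dev * (y - y.mean())) / np.sum(x_dev ** 2)
    a_hat = y.mean() - b_hat * x.mean()

    # R-squared: fraction of the variance in y explained by the fitted line
    residuals = y - (a_hat + b_hat * x)
    r_squared = 1 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)
    r = np.corrcoef(x, y)[0, 1]        # sample correlation coefficient
    return a_hat, b_hat, r_squared, r

a_hat, b_hat, r_squared, r = simulate_and_fit()
print(a_hat, b_hat)                    # close to the true values 1.0 and 2.0
print(r_squared, r ** 2)               # equal up to rounding in single-predictor regression
```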

Class material

- Class notes:

- Code:

Reading:

- ER:

- 4.6 (Properties of Normal distribution)

- Definition 3.3.3 covariance

- 10.1 (related variables): Example 10.1.1

- 10.3.2 (Simple linear regression model): Example 10.3.3. I use slightly different notation (e.g., instead of b, I write a hat over the regression coefficient to indicate its estimate). You can skip Theorems 10.3.2, 10.3.3, and 10.3.4 for now.

Assignments due:

- HW2

- HW1 Self-evaluation

Week 4: Other aspects of single predictor LR

Topics:

- Computation: Finish regression examples in Python, coefficient of determination

- More on coefficient of determination, estimators, standard error (Wednesday/Friday)

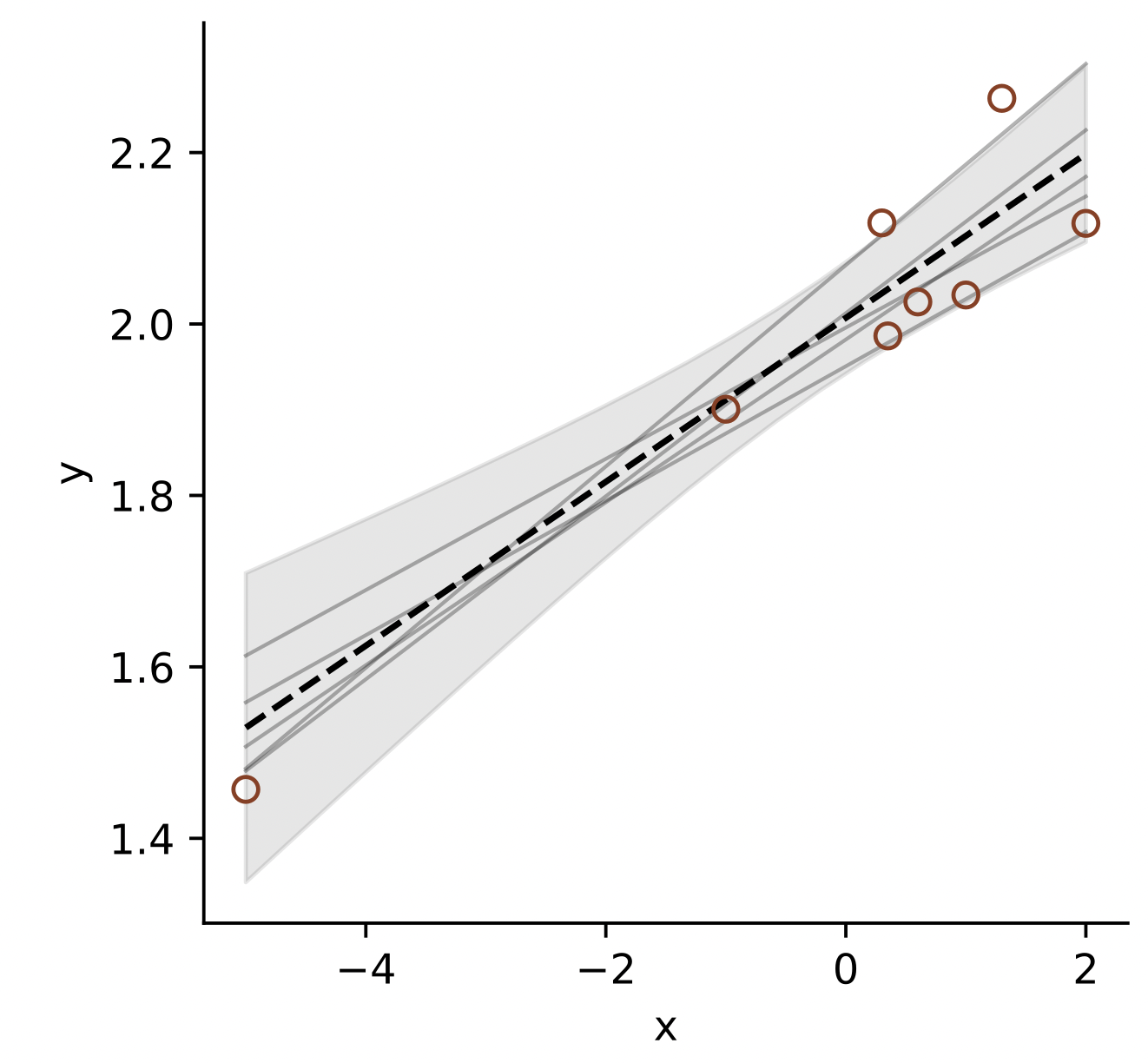

- Computation: regression with `statsmodels`, visualizing confidence intervals in regression (Friday)
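The standard error of the slope is the standard deviation of $\hat b$ across repeated datasets. A minimal Monte Carlo check, assuming fixed predictor values and known noise level $\sigma$ (all parameter values hypothetical): the spread of simulated slopes should match $\sigma / \sqrt{\sum_i (x_i - \bar x)^2}$.

```python
import numpy as np

def slope_standard_error_check(a=1.0, b=2.0, sigma=1.0, n=50, reps=5000, seed=0):
    """Compare the Monte Carlo spread of b_hat with sigma / sqrt(sum((x - xbar)^2))."""
    np.random.seed(seed)
    x = np.random.uniform(0, 10, size=n)   # predictor held fixed across replications
    x_dev = x - x.mean()
    sxx = np.sum(x_dev ** 2)

    b_hats = np.empty(reps)
    for i in range(reps):
        y = a + b * x + np.random.normal(0, sigma, size=n)  # fresh noise each replication
        b_hats[i] = np.sum(x_dev * (y - y.mean())) / sxx    # least squares slope

    theoretical_se = sigma / np.sqrt(sxx)
    return b_hats.mean(), b_hats.std(), theoretical_se

mean_b, simulated_sd, theoretical_se = slope_standard_error_check()
print(mean_b)                          # close to b = 2.0, since b_hat is unbiased
print(simulated_sd, theoretical_se)    # these two should nearly agree
```

In practice $\sigma$ is unknown; `statsmodels` replaces it with the residual standard error, and with `import statsmodels.api as sm` the attribute `sm.OLS(y, sm.add_constant(x)).fit().bse` reports the resulting estimated standard errors.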

Reading:

- ROS

- Chapter 4: Read the entire chapter (it's not too technical), but 4.2 and 4.4 are especially important.

- ER (OPTIONAL): Read these if you would prefer a more technical treatment.

- 6.1 and 6.3

- ISLP (OPTIONAL): This is helpful if you would like additional examples in Python.

- 3.1 (Linear regression)

Class material

- Class notes:

- Code:

Assignments due:

- HW3 (due date pushed to Week 5)

- HW2 Self-evaluation

Week 5: Hypothesis testing for LR, MIDTERM (Oct 16)

Topics:

- Midterm review (Monday)

- Introduction to regression with multiple predictors (Friday)

- Computation: $p$-values, performing multiple regression in `statsmodels`, and data visualization (Friday)

Reading:

- No new reading

Assignments due:

- Midterm

- HW3

Week 6: Multiple predictor LR I

Topics:

- No class Monday

- Effects of adding predictors to regression models (Wednesday)

- Interpreting regression coefficients and model building considerations (Wednesday)

- Computation: Examples in Python (Wednesday)
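A small sketch of what adding a predictor can do to a coefficient, assuming a hypothetical simulation in which $x_2$ is correlated with $x_1$ and both affect $y$. With $x_1$ alone, its coefficient also absorbs part of $x_2$'s effect (roughly $2 + 3 \times 0.8 = 4.4$ here, since the regression of $x_2$ on $x_1$ has slope 0.8); with both predictors, the estimates are close to the true values. `np.linalg.lstsq` is used for the least squares fits.

```python
import numpy as np

np.random.seed(0)
n = 5000
x1 = np.random.normal(0, 1, size=n)
x2 = 0.8 * x1 + np.random.normal(0, 0.6, size=n)        # x2 is correlated with x1
y = 1 + 2 * x1 + 3 * x2 + np.random.normal(0, 1, size=n)

ones = np.ones(n)
# Regression on x1 alone: the x1 coefficient also picks up part of x2's effect
coef_single, *_ = np.linalg.lstsq(np.column_stack([ones, x1]), y, rcond=None)
# Regression on both predictors: coefficients close to the true (1, 2, 3)
coef_both, *_ = np.linalg.lstsq(np.column_stack([ones, x1, x2]), y, rcond=None)

print(coef_single)  # slope near 4.4
print(coef_both)    # near [1, 2, 3]
```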

Reading:

- ROS:

- Ch. 10: Ignore the R code and skip 10.5, 10.8, and 10.9

- ISLP (OPTIONAL): This is helpful if you would like additional examples in Python beyond my notebooks.

- 3.1 (Linear regression)

Class material

- Class notes:

Assignments due:

- No HW due

- HW3 Self-evaluation

Week 7: Multiple predictor LR II

Topics:

- Simpson's paradox (Monday)

- Categorical predictors/dummy variables (Monday/Wednesday)

- Interactions (Wednesday)

- Computation: Hands-on examples in `statsmodels`
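A minimal Simpson's paradox sketch with a dummy variable, using hypothetical simulated data: within each group the slope of $y$ on $x$ is $+1$, but group 1 has larger $x$ and much smaller $y$, so the pooled slope comes out negative. Including the group indicator $g$ as a predictor recovers the within-group slope.

```python
import numpy as np

np.random.seed(0)
n = 5000
g = np.random.binomial(1, 0.5, size=n)                  # group indicator (dummy variable)
x = 5 * g + np.random.normal(0, 1, size=n)              # group 1 has larger x values
y = 1.0 * x - 10 * g + np.random.normal(0, 1, size=n)   # within each group, slope is +1

ones = np.ones(n)
pooled, *_ = np.linalg.lstsq(np.column_stack([ones, x]), y, rcond=None)
adjusted, *_ = np.linalg.lstsq(np.column_stack([ones, x, g]), y, rcond=None)

print(pooled[1])    # negative: ignoring the groups reverses the sign of the trend
print(adjusted[1])  # close to +1 once the dummy variable g is included
print(adjusted[2])  # close to -10, the shift between the two groups
```

An interaction would add the column `x * g` to the design matrix, allowing the slope to differ between the groups.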

Reading/notes:

- My typed notes

- Monday (10/28) CLASS

- Wednesday (10/30) CLASS

- ISLP: Sections 3.3.1 and 3.3.2

- Regression and Other Stories

- Section 12.1 and 12.2 (linear transformations of predictors)

- Section 11.3 (residual plots)

Assignments due:

- HW4 (deadline extended -- see canvas)

- No Self-evaluation due

Week 8: Model assessment and nonlinear models

Topics:

- Bias variance tradeoff, overfitting, double descent (Monday)

- Cross validation (Monday)

- Regularization (Wednesday/Friday)

- Laplace rule of succession
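A minimal k-fold cross validation sketch, assuming hypothetical data $y = \sin(2\pi x) + \text{noise}$ and polynomial models of different degrees (fit with `np.polyfit`). Each fold is held out once, the model is fit on the remaining folds, and the held-out mean squared errors are averaged. The helper `kfold_cv_mse` is a hypothetical name.

```python
import numpy as np

def kfold_cv_mse(x, y, degree, folds):
    """Average held-out mean squared error of a polynomial fit across the given folds."""
    errors = []
    for test_idx in folds:
        train_mask = np.ones(len(x), dtype=bool)
        train_mask[test_idx] = False                        # hold out this fold
        coefs = np.polyfit(x[train_mask], y[train_mask], degree)
        predictions = np.polyval(coefs, x[test_idx])
        errors.append(np.mean((y[test_idx] - predictions) ** 2))
    return np.mean(errors)

np.random.seed(1)
n, k = 200, 5
x = np.random.uniform(0, 1, size=n)
y = np.sin(2 * np.pi * x) + np.random.normal(0, 0.3, size=n)
folds = np.array_split(np.random.permutation(n), k)        # same folds for every degree

cv_error = {d: kfold_cv_mse(x, y, d, folds) for d in [1, 3, 6]}
print(cv_error)  # degree 1 underfits, so its CV error should be clearly larger
```

Reusing the same folds for every degree means the comparison reflects the models rather than different random splits.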

Reading/notes:

- My typed notes from 2023 (DRAFT; covers cross validation, overfitting, and Fourier series). The Colab notebook is linked within.

- Monday (11/4) CLASS

- Wednesday/Friday (11/6 and 11/8) CLASS (I ended class on Friday with some examples of regularization)

- ISLP: Sections 2.1 and 2.2 (overfitting and bias variance tradeoff)

- ISLP: Section 6.2 (regularization)

- ISLP: Sections 4.1, 4.2, 4.3 (logistic regression)

- ISLP: There is a section on cross validation, but it might be a bit confusing since we didn't cover logistic regression

- Regression and Other Stories: Section 11.8 (optional additional explanation of CV)
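Ridge regression is one of the regularization methods in ISLP 6.2. A minimal sketch, assuming an unpenalized intercept and hypothetical simulated data: minimizing $\sum_i (y_i - \beta_0 - x_i^\top \beta)^2 + \lambda \|\beta\|^2$ gives $\hat\beta = (X_c^\top X_c + \lambda I)^{-1} X_c^\top y_c$ for centered $X_c, y_c$. Setting $\lambda = 0$ recovers least squares, and larger $\lambda$ shrinks the coefficients. (ISLP also standardizes predictors before penalizing, which is omitted here.)

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Ridge regression with an unpenalized intercept; returns (intercept, coefficients)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean                     # centering removes the intercept
    p = X.shape[1]
    beta = np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)
    intercept = y_mean - x_mean @ beta
    return intercept, beta

np.random.seed(0)
n, p = 100, 10
X = np.random.normal(0, 1, size=(n, p))
y = X[:, 0] - 2 * X[:, 1] + np.random.normal(0, 1, size=n)

for lam in [0.0, 10.0, 100.0]:
    b0, beta = ridge_fit(X, y, lam)
    print(lam, np.linalg.norm(beta))   # coefficient norm shrinks as lam grows
```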

Additional resources

Assignments due:

- HW4 Self-evaluation

Week 9/10: Fourier models, Bayesian Inference, other topics

Topics:

- Fourier models/time series data (Monday)

- Priors (Wednesday)

- Laplace rule of succession from a Bayesian perspective (Wednesday)

- Relationship between Bayesian linear regression and regularization

- The kernel trick, other topics?
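For the Fourier models topic, a minimal sketch, assuming a single known period (a hypothetical 365-day cycle) and simulated daily data: build cosine and sine columns for the first few harmonics and fit them by ordinary least squares. `fourier_design` is a hypothetical helper, not a library function.

```python
import numpy as np

def fourier_design(t, period, n_harmonics):
    """Design matrix with an intercept and cos/sin columns for harmonics 1..n_harmonics."""
    cols = [np.ones_like(t)]
    for k in range(1, n_harmonics + 1):
        cols.append(np.cos(2 * np.pi * k * t / period))
        cols.append(np.sin(2 * np.pi * k * t / period))
    return np.column_stack(cols)

np.random.seed(0)
t = np.arange(3 * 365, dtype=float)      # three years of daily time points (simulated)
y = (10 + 5 * np.cos(2 * np.pi * t / 365) + 2 * np.sin(4 * np.pi * t / 365)
     + np.random.normal(0, 1, size=t.size))

X = fourier_design(t, period=365, n_harmonics=2)
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
print(coef)  # columns: [intercept, cos1, sin1, cos2, sin2]; true values [10, 5, 0, 0, 2]
```

Because the model is linear in the coefficients, this is ordinary multiple regression with transformed predictors.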

Reading/notes:

- Wednesday (11/15) CLASS

- ER:

- 7.1 (Priors and posterior): Focus on Example 7.1.1 and try following/reproducing the calculations with $\alpha = \beta = 1$ (so the beta distribution, which we haven't discussed, becomes the uniform distribution, which you are very familiar with).

- 10.3.3 (Bayesian linear regression) -- Optional

- ISLP: Section 6.2, subsection titled "Bayesian Interpretation of Ridge Regression and the Lasso"
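A numerical version of the Bernoulli-trials calculation with a uniform prior ($\alpha = \beta = 1$), sketched on a grid of $\theta$ values with hypothetical data of 7 successes in 10 trials. The posterior is proportional to prior times likelihood, $\theta^s (1-\theta)^{n-s}$, and its mean should match Laplace's rule of succession, $(s + 1)/(n + 2)$.

```python
import numpy as np

def posterior_mean_uniform_prior(successes, trials, grid_size=10_001):
    """Grid approximation of E[theta | data] for Bernoulli trials with a uniform prior."""
    theta = np.linspace(0, 1, grid_size)
    prior = np.ones_like(theta)                                   # Beta(1, 1) = Uniform(0, 1)
    likelihood = theta ** successes * (1 - theta) ** (trials - successes)
    unnormalized = prior * likelihood
    posterior = unnormalized / unnormalized.sum()                 # normalize over the grid
    return np.sum(theta * posterior)

s, n = 7, 10
print(posterior_mean_uniform_prior(s, n))   # numerical posterior mean
print((s + 1) / (n + 2))                    # Laplace's rule of succession: 8/12
```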

Additional resources

- Bayesian inference for Bernoulli trials

- Bayesian inference (from 3Blue1Brown, there are many other great videos on youtube)

- Fourier series (from 3Blue1Brown), just for fun

- Double descent